What Is Claude? A Comprehensive Explanation of the New “Constitutional AI” Design and Its Potential

Artificial intelligence (AI) continues to evolve to meet a wide range of modern needs, such as workflow automation, data analysis, and customer support. Among the newest entrants, Claude, developed by Anthropic, has attracted attention as a large language model (LLM) that prioritizes safety and ethics through its distinctive “Constitutional AI” approach. Competing with ChatGPT and Gemini, Claude positions itself as a highly trustworthy option for enterprises and researchers alike.

This article systematically explains everything from Claude’s core concepts and technical features to comparisons with other AI models, reasons for enterprise adoption, and key caveats.

1. What Is Claude?

1.1 Background of the Developer, Anthropic

Claude is built by Anthropic, a U.S. AI research company founded in 2021. Its cofounders include siblings Dario Amodei (CEO) and Daniela Amodei (President), both formerly of OpenAI, where Dario led research that contributed to the GPT-2 and GPT-3 language models.

Anthropic describes itself as an AI safety and research company building reliable, interpretable, and steerable AI systems. It aims to advance generative AI responsibly by mitigating ethical risks (e.g., bias, harmful outputs) and focusing on trustworthy, explainable models.

1.2 Evolution of the Claude Series (Claude 1–3.7)

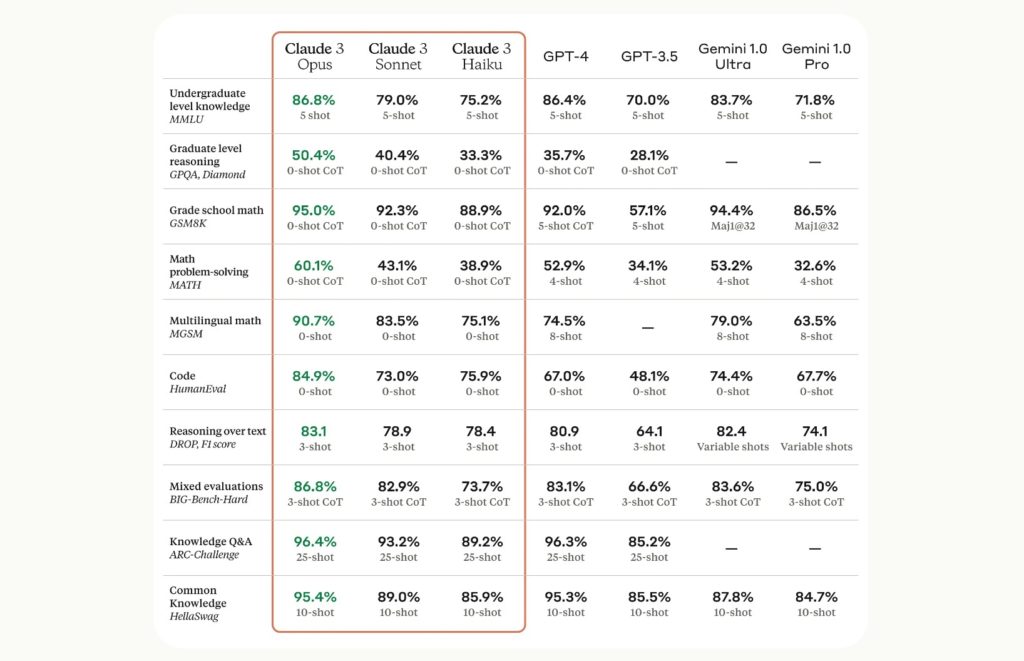

The Claude lineup has evolved from the first model to Claude 3.7 Sonnet, with increasing performance and functionality. The main versions are summarized below:

| Model | Release Date | Context Window | Key Features | Typical Use Cases |
|---|---|---|---|---|
| Claude 1 | Mar 2023 | 9K tokens | First generation; safety-focused; basic chat and text generation | Personal use, early testing |
| Claude 2 | Jul 2023 | 100K tokens | Improved long-form handling; code generation; document analysis | Initial enterprise pilots, research support |
| Claude 3 Haiku | Mar 2024 | 200K tokens | Fast and low-cost; suited to lightweight tasks | Customer support, light workloads |
| Claude 3 Sonnet | Mar 2024 | 200K tokens | Balanced model; image analysis, coding, reasoning | Business operations, development support |
| Claude 3 Opus | Mar 2024 | 200K tokens | Most capable Claude 3 model; complex reasoning; long documents | Enterprise, research |
| Claude 3.5 Sonnet | Jun 2024 | 200K tokens | Better image understanding, stronger reasoning, more natural prose, coding assistance | Advanced business and creative tasks |
| Claude 3.7 Sonnet | Feb 2025 | 200K tokens | Hybrid reasoning (near-instant answers or step-by-step extended thinking); released alongside Claude Code | Real-time apps, advanced development |

Claude 3 and later models offer a 200K-token context window, multimodal input (image understanding), and strengthened ethical safeguards, a combination well suited to corporate needs.

1.3 What Is Constitutional AI?

Constitutional AI is a training method in which the model critiques and revises its own outputs against a written set of principles, the “constitution.” In a later reinforcement-learning stage, AI-generated judgments based on those same principles stand in for much of the human feedback normally used to train for harmlessness.

The aim is a model that stays both helpful and safe while behaving more consistently, and because the principles are written down, its intended behavior is more transparent. The approach is gaining attention as a new paradigm that emphasizes ethics and transparency in AI development.
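The self-critique loop described above can be sketched in a few lines. This is a simplified illustration of the supervised phase described in Anthropic's Constitutional AI paper, not Anthropic's training code: `generate` is a hypothetical stand-in for any model call, the two principles are illustrative, and `fake_model` exists only so the loop runs offline. In actual training, the revised responses become fine-tuning data, followed by a reinforcement-learning stage that uses AI-generated preference labels.

```python
from typing import Callable, List, Tuple

# Hypothetical example principles; Anthropic's published constitution is longer and worded differently.
PRINCIPLES: List[str] = [
    "Choose the response that is least likely to be harmful or offensive.",
    "Choose the response that is most honest and does not invent facts.",
]


def critique_and_revise(
    prompt: str,
    generate: Callable[[str], str],
    principles: List[str],
) -> Tuple[str, List[str]]:
    """Sketch of the supervised phase: draft once, then critique and revise per principle.

    `generate` is a hypothetical text-generation interface.
    Returns the final revision and every intermediate version.
    """
    response = generate(prompt)
    versions = [response]
    for principle in principles:
        # Ask the model to critique its own answer against one principle.
        critique = generate(
            f"Critique the response below using this principle: {principle}\n"
            f"Request: {prompt}\nResponse: {response}"
        )
        # Ask the model to rewrite its answer so that the critique is addressed.
        response = generate(
            "Revise the response to address the critique.\n"
            f"Critique: {critique}\nResponse: {response}"
        )
        versions.append(response)
    return response, versions


def fake_model(text: str) -> str:
    """Deterministic stand-in for a real model so the loop can run without an API."""
    if text.startswith("Critique"):
        return "critique: could be more careful"
    if text.startswith("Revise"):
        return "revised answer"
    return "first draft"


final, versions = critique_and_revise("Explain how vaccines work.", fake_model, PRINCIPLES)
print(final)     # revised answer
print(versions)  # ['first draft', 'revised answer', 'revised answer']
```

Keeping `generate` as a plain callable makes the loop independent of any particular model provider.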

Let’s now dive into Claude’s technical strengths and how they contrast with competing models.

2. Claude’s Technical Features and Strengths

2.1 Ultra-Long Context Handling (up to 200 k tokens)

Claude can process 200,000 tokens, roughly 150,000 words or a book of several hundred pages, in a single request. For example, it can analyze entire legal contracts or large codebases and answer detailed questions about them. On X (formerly Twitter), some users describe Claude’s long-context abilities as “revolutionizing code reviews.” This capacity is especially useful in research and legal work.
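The ratio above (200,000 tokens to about 150,000 words, or 0.75 words per token) gives a quick way to judge whether a document will fit. The sketch below is a rough heuristic built on that ratio and nothing more: real counts depend on the tokenizer, whitespace word counts do not apply to languages such as Japanese, and the 4,096-token reserve for the reply is an assumed value.

```python
# Rough heuristic from the figures above: 200,000 tokens ~ 150,000 words,
# i.e. about 0.75 English words per token. Real counts depend on the tokenizer.
WORDS_PER_TOKEN = 150_000 / 200_000  # 0.75


def estimate_tokens(text: str) -> int:
    """Estimate the token count of whitespace-separated English text."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def fits_in_context(
    text: str,
    context_window: int = 200_000,
    reserve_for_output: int = 4_096,  # assumed room left for Claude's reply
) -> bool:
    """Check whether the text, plus room for the reply, fits in the context window."""
    return estimate_tokens(text) + reserve_for_output <= context_window


contract = "word " * 100_000          # a 100,000-word document
print(estimate_tokens(contract))      # 133333
print(fits_in_context(contract))      # True
```

For exact numbers, use the model's own tokenizer or token-counting tools rather than this estimate.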

2.2 High-Precision Natural-Language Understanding & Reasoning

Claude delivers advanced natural-language understanding (NLU) and reasoning across diverse domains. At its launch, Anthropic reported that Claude 3.5 Sonnet outperformed competing models on a wide range of reasoning, knowledge, and coding benchmarks, and it handles complex legal texts, scientific papers, and code generation well.

Anthropic reports that Claude maintains consistent reasoning across multiple languages, excelling at zero-shot QA and math problem-solving.

2.3 Safety-First, Consistent Outputs

Thanks to Constitutional AI, Claude emphasizes safety and consistency. For sensitive prompts, it aims to give neutral, fact-based answers while avoiding offensive language and misinformation. In political discussions, for instance, Claude tries to present balanced perspectives and factual information rather than take sides, which helps build trust in fields such as education and healthcare.

2.4 File & Document Support for Practical Workflows

Claude parses PDFs, CSVs, and images (up to 30 MB per file) for summarization and Q&A. For example, it can extract tables and graphs from a 100-page PDF to analyze financial trends. The legal-tech company Robin AI reports using Claude to cut contract-review time ten-fold, and gains like this make the feature valuable in legal, financial, and research settings.

2.5 API Integration & External-Tool Connectivity

Claude is available through AWS Bedrock and Google Vertex AI and integrates with tools such as Slack, Notion, and GitHub. Claude Code, released as a research preview in February 2025, is a command-line tool that supports code review and debugging directly in a developer’s repository. Teams can also query Claude in Slack for real-time collaboration support.
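To make the API integration concrete, here is a minimal sketch that only builds a request body, so it runs without credentials or network access. It assumes the shape of Anthropic’s Messages API (`model`, `max_tokens`, `messages`, and an optional `system` field, sent with a POST to `https://api.anthropic.com/v1/messages`); the model ID is an assumption for illustration. AWS Bedrock and Google Vertex AI accept a similar body but identify the model and API version in their own way, so check each platform’s documentation before reusing it.

```python
import json
from typing import Optional

# Model ID is an assumption for illustration; check Anthropic's current model list.
DEFAULT_MODEL = "claude-3-7-sonnet-20250219"


def build_messages_request(
    user_text: str,
    model: str = DEFAULT_MODEL,
    max_tokens: int = 1024,
    system: Optional[str] = None,
) -> dict:
    """Build a JSON body in the shape used by Anthropic's Messages API."""
    body = {
        "model": model,
        "max_tokens": max_tokens,  # upper bound on the length of Claude's reply
        "messages": [{"role": "user", "content": user_text}],
    }
    if system is not None:
        body["system"] = system  # instructions that apply to the whole conversation
    return body


request = build_messages_request(
    "Summarize the attached contract in five bullet points.",
    system="You are a careful legal assistant.",
)
print(json.dumps(request, indent=2))
```

In practice, the official `anthropic` Python SDK sends an equivalent request through `client.messages.create(...)` using the same field names.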

3. Differences Among Claude, ChatGPT, Gemini, and LLaMA/Mistral

Claude’s strengths lie in safety, long-context handling, and enterprise-oriented design, while the other leading AI models employ different approaches and have distinct characteristics. In this section we compare Claude with ChatGPT (OpenAI), Gemini (Google), and LLaMA/Mistral (open-source) from the perspectives of philosophy, technology, and use cases.

Understanding each model’s areas of expertise and operational constraints will help you formulate the best selection or combination strategy for your own organization.

3.1 Differences Between Claude and ChatGPT

| Item | Claude | ChatGPT |
|---|---|---|
| Developer | Anthropic | OpenAI |
| Model Variants | Haiku, Sonnet, Opus, 3.5 / 3.7 Sonnet | GPT-4o, GPT-4, GPT-4o mini |
| Context Window | 200,000 tokens | 128,000 tokens (GPT-4o) |
| Safety | Ethical behavior governed by Constitutional AI (alongside RLHF) | Aligned mainly through RLHF and OpenAI’s usage policies |
| Strengths | Long-document processing, ethical responses, enterprise-grade security | Image generation, web search, voice interaction, creativity |
| Weaknesses | No image generation; web search added only in 2025 | Smaller context window, less consistent ethical responses |
| Integrations | GitHub, Slack, AWS Bedrock | Microsoft 365, Zapier, web search |
| Typical Uses | Long-form document analysis, code review, compliance-heavy work | Creative tasks, real-time information, general dialogue |

Claude excels in long-document processing and safety, while ChatGPT is strong in multifunctionality and creativity.

3.2 Philosophical and Structural Contrast Between Claude and Gemini

| Item | Claude | Gemini |
|---|---|---|
| Developer | Anthropic | Google |
| Model Variants | Haiku, Sonnet, Opus, 3.5 / 3.7 Sonnet | Gemini 1.5 Pro, 1.5 Flash, 1.0 Ultra |
| Philosophy | Emphasis on safety and ethics (Constitutional AI) | Democratizing information access, integrated web search |
| Context Window | 200,000 tokens | 1,000,000 tokens (1.5 Pro) |
| Safety | Strict ethical management via Constitutional AI | Safety filters and Google’s ethics standards (AI Principles) |
| Strengths | Long-document processing, enterprise security, ethical responses | Web search, video handling, deep Google-ecosystem integration |
| Weaknesses | No image generation; web search added only in 2025 | Verbose answers, inconsistency in less widely used languages |
| Integrations | GitHub, Slack, AWS Bedrock | Google Workspace, Vertex AI |
| Typical Uses | In-house data processing, compliance-focused tasks | Real-time information retrieval, customer support |

Claude is superior in ethics and security, whereas Gemini shines in web search and multimodality.

3.3 Technical Comparison of Claude and LLaMA / Mistral

| Item | Claude | LLaMA | Mistral |
|---|---|---|---|
| Developer | Anthropic | Meta AI | Mistral AI |
| Model Variants | Haiku, Sonnet, Opus, 3.5 / 3.7 Sonnet | LLaMA 2, LLaMA 3 | Mixtral, Mistral Large |
| License | Proprietary | Meta community license (commercial use permitted, with conditions) | Apache 2.0 for open-weight models such as Mixtral; Mistral Large is commercially licensed |
| Context Window | 200,000 tokens | 4,096 to 8,192 tokens | 8,192 to 32,768 tokens |
| Safety | Ethical behavior shaped by Constitutional AI | Basic safety tuning; deployers must add their own controls | Deployers are responsible for safety controls on open weights |
| Strengths | Long-document processing, enterprise security, ethical responses | Customization for research | Cost efficiency, lightweight models |
| Weaknesses | Limited fine-tuning, no image generation | License conditions; hosting and safety work fall on the user | Fewer built-in enterprise-grade security features |
| Integrations | GitHub, Slack, AWS Bedrock | Local execution (Docker, etc.) | Local execution, Hugging Face |
| Typical Uses | Enterprise adoption, long-document analysis | Academic research, experimental projects | Small- to mid-size businesses, lightweight tasks |

Claude is optimized for enterprises; LLaMA suits research; Mistral prioritizes cost efficiency.

Claude leads in safety and long-context handling, while the other models emphasize creativity or customization. Choosing the right tool depends on your specific use case. Next, let’s see why enterprises select Claude.

4. Why Claude Is Chosen

4.1 Enterprise Trust: Security and Governance

Claude Enterprise supports corporate IT security standards through single sign-on (SSO), role-based access control, and audit logs. Administrators can manage user actions and permissions in detail, reducing the risk of information leaks. Anthropic also states that its commercial offerings support GDPR and HIPAA compliance requirements and that customer data is not used for model training by default, which provides strong assurance when handling sensitive information.

4.2 Long-Context Advantage: Large-Scale Context Handling

Claude can maintain up to 200,000 tokens (up to 500,000 tokens in Enterprise), allowing entire documents or codebases—equivalent to several hundred pages—to be processed at once. This enables tasks like checking contract consistency, reviewing massive code repositories, or summarizing and comparing academic papers, which were previously difficult because the model could not “read everything at once.”
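Loading several documents at once works best when each one is clearly delimited. The sketch below assembles contracts into a single prompt with XML-style tags, following the layout suggested in Anthropic’s long-context prompting tips (documents first, question last). The tag names are a prompting convention rather than required API fields, and the `Document` class and sample contracts are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Document:
    title: str
    text: str


def build_multi_document_prompt(docs: List[Document], question: str) -> str:
    """Wrap each document in XML-style tags and put the question after all of them."""
    parts = ["<documents>"]
    for index, doc in enumerate(docs, start=1):
        parts.append(f'<document index="{index}">')
        parts.append(f"<source>{doc.title}</source>")
        # Document text is inserted as-is (not XML-escaped); it is prompt text, not parsed XML.
        parts.append(f"<document_content>\n{doc.text}\n</document_content>")
        parts.append("</document>")
    parts.append("</documents>")
    # Anthropic's long-context tips suggest placing the question after the long material.
    parts.append(question)
    return "\n".join(parts)


contracts = [
    Document("Supplier agreement A", "Term: 12 months. Governing law: New York."),
    Document("Supplier agreement B", "Term: 24 months. Governing law: Delaware."),
]
prompt = build_multi_document_prompt(
    contracts, "List every clause where agreements A and B are inconsistent."
)
print(prompt)
```

The `index` and `source` tags let Claude cite which contract a finding comes from, which helps when checking consistency across many documents.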

4.3 Coding Support: The Power of Claude Code

Claude Code helps with code generation, debugging, review, and pull-request creation driven by natural-language instructions. It runs in the terminal and works with Git and GitHub, so developers can issue plain-language commands against their repository code. Embedding AI directly into existing workflows in this way can speed up development and improve code quality at the same time.

4.4 Practical Utility: File Analysis and Flexible API Integration

Claude ingests PDFs, CSVs, images, and more, and can summarize them or extract structured information; for example, it can pull tables and graphs from financial reports for trend analysis. Claude is also available on cloud AI platforms such as AWS Bedrock and Google Vertex AI and integrates with tools like Slack, Notion, and GitHub. For operational monitoring, administrators can review usage dashboards and, on Enterprise plans, audit logs.

5. Points to Note with Claude

5.1 High Refusal Frequency and Its Background

Because of its Constitutional-AI-based ethics, Claude frequently declines sensitive prompts (e.g., political debates, aggressive speech), and its refusal rate is often reported to be higher than ChatGPT’s. For example, when asked for “an offensive joke,” Claude replies, “I’m sorry, but I can’t comply with that.” Prompts that state a clear, legitimate purpose help reduce unnecessary refusals.

5.2 Balance Between Cautious Expression and Creativity

Following its constitutional principles, Claude suppresses harmful, discriminatory, or extremist content, so its fiction and other creative writing can feel more structured and neutral than ChatGPT’s free-wheeling output. Since Claude Sonnet 4, however, flexibility has improved, striking a better balance among emotional expression, creativity, and safety.

5.3 Limits on Customization and Operability

Anthropic does not offer general-purpose fine-tuning for Claude; the main exception is fine-tuning of Claude 3 Haiku through AWS Bedrock. Customization therefore relies on prompt design and on choosing the right model tier (Haiku, Sonnet, or Opus) within the Constitutional-AI framework. Unlike OpenAI, which offers a fine-tuning API for several GPT models, organizations cannot directly retrain Claude, but prompt engineering and higher-level API integrations (e.g., GitHub) still allow flexible workflows.
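Since model choice is one of the main levers, teams sometimes encode it as a simple routing rule. The sketch below is hypothetical: the two boolean criteria and the mapping to tiers are illustrative assumptions, not Anthropic guidance, and a production router would return concrete model IDs and weigh cost budgets as well.

```python
from enum import Enum


class Tier(Enum):
    HAIKU = "haiku"    # fastest and cheapest
    SONNET = "sonnet"  # balance of speed, cost, and capability
    OPUS = "opus"      # most capable, slowest and most expensive


def choose_tier(needs_deep_reasoning: bool, latency_sensitive: bool) -> Tier:
    """Hypothetical routing rule mapping task requirements to a Claude model tier."""
    if needs_deep_reasoning:
        return Tier.OPUS    # e.g. multi-document legal analysis
    if latency_sensitive:
        return Tier.HAIKU   # e.g. live customer-support replies
    return Tier.SONNET      # default for everyday business tasks


print(choose_tier(needs_deep_reasoning=False, latency_sensitive=True))  # Tier.HAIKU
```

Checking reasoning depth first means accuracy-critical work is never downgraded just because a caller also asked for speed.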

5.4 Language Biases and Differences

According to the arXiv paper “Beyond No: Quantifying AI Over-Refusal and Emotional Attachment Boundaries,” Claude showed a 43% refusal rate on emotional-boundary prompts in English, versus below 1% in non-English usage. This gap suggests that Claude’s boundary handling differs by language, so in Japanese it may behave inconsistently or miss nuances. A second review, or more context in the prompt, is recommended.

5.5 Limits in File and Image Processing

Claude accepts many formats—PDF, DOCX, CSV, TXT, HTML—but limits each file to 30 MB and each chat to 20 files. In Project (knowledge-base) mode, file count is unlimited, yet total content must fit within the context window.

- PDFs under 100 pages: images and tables are parsed.

- PDFs over 100 pages: only text is processed.

- Images embedded in other file types are generally ignored.

For images, JPEG, PNG, GIF, and WebP are supported, up to 30 MB and 8,000 × 8,000 px; at least 1,000 × 1,000 px is recommended for accuracy. Image generation is not supported: Claude is designed to read visuals, not create them.
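These limits can be checked before uploading. The sketch below is a hypothetical pre-flight validator that encodes only the limits listed in this section (30 MB per file, 20 files per chat, 8,000 px maximum and 1,000 px recommended for images, full parsing only for PDFs under 100 pages). It does not call any Anthropic API, and both the `Upload` record and the reading of “30 MB” as 30 × 1024 × 1024 bytes are assumptions.

```python
from dataclasses import dataclass
from typing import List

# Limits as described in this section; 30 MB is assumed to mean 30 * 1024 * 1024 bytes.
MAX_FILE_BYTES = 30 * 1024 * 1024
MAX_FILES_PER_CHAT = 20
MAX_IMAGE_SIDE_PX = 8_000
RECOMMENDED_MIN_SIDE_PX = 1_000
FULL_PDF_PARSING_MAX_PAGES = 100
IMAGE_EXTENSIONS = {"jpg", "jpeg", "png", "gif", "webp"}


@dataclass
class Upload:
    name: str
    size_bytes: int
    width_px: int = 0   # only used for images
    height_px: int = 0  # only used for images
    pages: int = 0      # only used for PDFs


def check_uploads(uploads: List[Upload]) -> List[str]:
    """Return problems and warnings; an empty list means every check passed."""
    problems = []
    if len(uploads) > MAX_FILES_PER_CHAT:
        problems.append(f"too many files: {len(uploads)} > {MAX_FILES_PER_CHAT}")
    for up in uploads:
        ext = up.name.rsplit(".", 1)[-1].lower()
        if up.size_bytes > MAX_FILE_BYTES:
            problems.append(f"{up.name}: larger than 30 MB")
        if ext in IMAGE_EXTENSIONS:
            if max(up.width_px, up.height_px) > MAX_IMAGE_SIDE_PX:
                problems.append(f"{up.name}: exceeds 8,000 px on a side")
            elif min(up.width_px, up.height_px) < RECOMMENDED_MIN_SIDE_PX:
                problems.append(f"{up.name}: warning, below the recommended 1,000 px")
        elif ext == "pdf" and up.pages > FULL_PDF_PARSING_MAX_PAGES:
            problems.append(f"{up.name}: warning, over 100 pages so only text is processed")
    return problems


batch = [
    Upload("q3-report.pdf", 12_000_000, pages=240),
    Upload("chart.png", 2_500_000, width_px=800, height_px=600),
]
for issue in check_uploads(batch):
    print(issue)
```

Hard limits and soft recommendations are reported in the same list but worded differently, so a caller can block on the former and merely log the latter.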

6. Summary

Claude, Anthropic’s large language model, features a distinctive Constitutional-AI approach that prioritizes safety and consistency. Long-document processing, file analysis, enterprise-grade security, and robust tool integrations make it increasingly popular among businesses and research institutions.

Conversely, the lack of image generation, limited fine-tuning, and uneven Japanese support are caveats. When adopting Claude, compare its capabilities with those of other models and confirm that it fits your operational needs, considering purpose, required functions, and organizational readiness.

FAQs

Q1: How does Constitutional AI differ from RLHF (Reinforcement Learning from Human Feedback)?

Constitutional AI, introduced by Anthropic, aims to make AI behave in line with human values and ethical principles with less reliance on human labeling. RLHF, by contrast, trains models using human ratings of “desirable” responses as reward signals. Because those preferences stay implicit in many individual ratings, the principles behind RLHF can be opaque, whereas Constitutional AI writes down explicit rules (e.g., human rights, respect, fairness) and has the AI critique and revise its own outputs against them. Anthropic argues that this improves consistency and explainability, supporting trustworthy AI in enterprise and public-sector settings.

Q2: Where does Claude’s 200,000-token capacity help in practice?

Claude’s 200,000-token window, about 400 pages, lets it read an entire large document and analyze it in depth. Legal teams can load multiple contracts and spot discrepancies; engineers can map dependencies across large codebases and look for latent bugs. Tasks that once took days can shrink to minutes with Claude’s help.

Q3: Claude claims to be safer—how is that proven?

Anthropic states that harmful outputs (violence, discrimination, misinformation) in Claude 3.5 appear in less than 0.1 % of cases—extremely low for commercial use. Claude also explicitly refuses unsafe prompts based on its “constitution,” strengthening risk management, especially valuable in sectors like education, healthcare, and finance.

Q4: Claude handles images and PDFs—what limitations apply?

Claude supports image and document analysis, such as OCR on scanned contracts, summarizing graphs in PDFs, and detecting trends in financial charts. Limitations: each file must be 30 MB or less, with up to 20 files per chat, and it is unsuitable for large 3D or ultra-high-resolution data. Claude cannot generate images; unlike ChatGPT or Gemini, it focuses on interpreting existing information.

Q5: Claude is said to be hard to customize—how can I optimize it for my workflows?

Because Claude offers almost no fine-tuning or retraining (the main exception being Claude 3 Haiku on AWS Bedrock), fully custom-trained models are generally not an option. Instead, customization comes from prompt design and tool integration: link Claude to Slack or GitHub, embed formatting rules in prompts to automate tasks and keep reports consistent, and balance cost against accuracy by choosing among Haiku (fast), Sonnet (balanced), and Opus (high-precision).
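As a minimal sketch of embedding formatting rules in prompts, the code below assembles a system prompt from a list of house rules and adds a simple post-check on replies. The rules, the role text, and both helper functions are hypothetical; the resulting string would typically be passed as the `system` parameter of the Messages API.

```python
from typing import List

# Hypothetical house rules for a weekly status report.
REPORT_RULES: List[str] = [
    "Start with a one-sentence summary.",
    "Use at most five bullet points, each starting with '- '.",
    "End with a line that begins 'Next steps:'.",
]


def build_system_prompt(role: str, rules: List[str]) -> str:
    """Turn a role description and a list of formatting rules into one system prompt."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return f"You are {role}.\nFollow these formatting rules in every reply:\n{numbered}"


def follows_report_format(reply: str, max_bullets: int = 5) -> bool:
    """Cheap post-check that a reply respects the bullet limit and the closing line."""
    lines = [line.strip() for line in reply.splitlines() if line.strip()]
    bullets = sum(1 for line in lines if line.startswith("- "))
    return bool(lines) and bullets <= max_bullets and lines[-1].startswith("Next steps:")


system_prompt = build_system_prompt("an assistant that writes weekly status reports", REPORT_RULES)
print(system_prompt)
print(follows_report_format("Shipped v2.\n- Fixed login bug\n- Added export\nNext steps: beta test"))  # True
```

Pairing the prompt with a post-check lets a workflow retry or flag replies that drift from the required format instead of relying on the prompt alone.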

