LangChain and LlamaIndex | Differences and How to Choose in RAG Design

With advances in large language models (LLMs), generative AI is being used more widely than ever in enterprises and research institutions. However, using an LLM as-is is often insufficient to meet real-world business requirements. For example, an LLM alone cannot incorporate information newer than its training data or give answers grounded in internal knowledge. In addition, long texts and complex contexts expose knowledge gaps and lead to hallucinations.

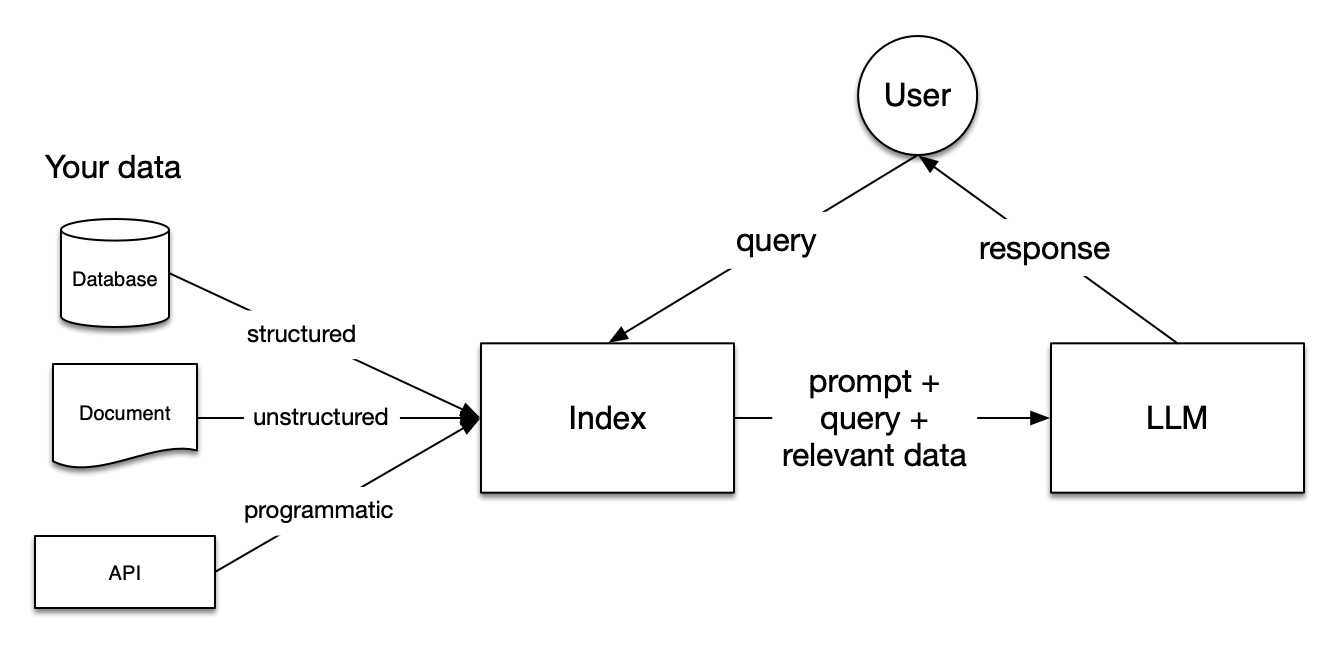

This is where RAG (Retrieval-Augmented Generation) comes in. RAG is an approach that combines “retrieval” and “generation.” By retrieving relevant external data and passing it to the LLM as context, it produces more reliable, grounded responses. This design is attracting attention in a wide range of fields, including internal information search, automated FAQ responses, legal document review, and research support.
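To make the retrieve-then-generate idea concrete, here is a minimal sketch in plain Python. It assumes a tiny in-memory document list, uses word overlap in place of real vector search, and replaces the LLM call with a hypothetical `fake_llm` stub; none of these names come from LangChain or LlamaIndex.

```python
import re

# Hypothetical in-memory "knowledge base"; in a real system this would be
# internal documents, a database, or a search index.
DOCUMENTS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client must be updated every quarter.",
    "New employees receive laptops on their first day.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real retrieval features."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieval step: rank documents by word overlap with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, context: list[str]) -> str:
    """Augmentation step: splice the retrieved text into the prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {question}"
    )

def fake_llm(prompt: str) -> str:
    """Generation placeholder: echoes the first context line.
    A real system would send the prompt to an LLM API here."""
    context_lines = [line[2:] for line in prompt.splitlines() if line.startswith("- ")]
    return context_lines[0] if context_lines else "I don't know."

question = "How many days do I have to submit expense reports?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(fake_llm(prompt))  # Expense reports must be submitted within 30 days of purchase.
```

The point of the sketch is the shape of the flow: the model only ever sees the question plus whatever the retrieval step selected, which is why retrieval quality largely bounds answer quality.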

In this article, we compare two representative frameworks that support RAG: LangChain and LlamaIndex. We organize their design philosophies, architectures, strengths, and weaknesses, and explain how to make the best choice depending on your project. We also include ideas and tips for practical applications so readers can make strategic decisions tailored to their own use cases.

1. What Is LangChain?

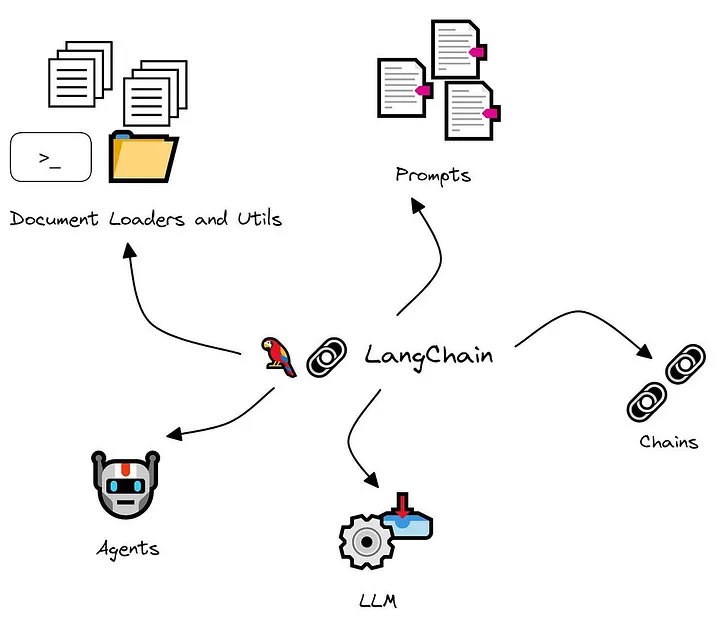

LangChain is a framework for developing applications that leverage generative AI. Released in 2022, it quickly spread among developers and enterprises worldwide. Its core idea is to place the LLM at the center of the application and orchestrate complex, multi-step processes around it.

Calling an LLM on its own yields a single response per request, which makes it awkward to apply directly to real-world tasks. LangChain introduces the concept of a “chain,” which breaks a task into steps that are processed in sequence. Through “agents,” it also lets the LLM choose the most suitable tool for the situation and proceed with a task dynamically.

With this mechanism, LangChain has gone beyond being a mere API wrapper and has become established as an “intelligent control framework” that enables business automation and knowledge integration.
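The chain and agent ideas can be sketched without any framework. The code below is an illustration under simplifying assumptions, not LangChain's API: `make_chain` composes ordinary functions in sequence, and the agent's decision is a hypothetical rule (`choose_tool`) standing in for the step where a real agent would ask the LLM which tool to use.

```python
from typing import Callable

Step = Callable[[str], str]

def make_chain(*steps: Step) -> Step:
    """A 'chain': run each step in order, feeding each output to the next."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run

# Hypothetical steps; in practice each might be a prompt, an LLM call, or a parser.
def strip_whitespace(text: str) -> str:
    return " ".join(text.split())

def to_title(text: str) -> str:
    return text.title()

# Hypothetical tools the agent can choose between.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda q: str(sum(int(n) for n in q.split("+"))),
    "echo": lambda q: q,
}

def choose_tool(query: str) -> str:
    """Stand-in for the LLM's decision; a real agent would ask the model to pick."""
    return "calculator" if "+" in query else "echo"

def agent(query: str) -> str:
    """An 'agent': select a tool based on the input, then call it."""
    return TOOLS[choose_tool(query)](query)

pipeline = make_chain(strip_whitespace, to_title)
print(pipeline("  quarterly   sales report "))  # Quarterly Sales Report
print(agent("2+3+4"))                            # 9
print(agent("hello"))                            # hello
```

The contrast is in who decides the control flow: a chain fixes the order of steps in advance, while an agent decides at run time which step to take next.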

1.1 Features

Summarizing LangChain’s features in a table makes the big picture easier to grasp.

| Feature | Description |
|---|---|
| Chain | Break down and connect tasks to execute them step by step |
| Agent | LLM selects tools and proceeds according to the situation |
| Module integration | Flexible connections to APIs, databases, and external cloud services |
| Prompt management | Templating enables consistent prompt design |
| Memory function | Maintains conversation history for long-term context understanding |
| Evaluation tools | Equipped with mechanisms for monitoring execution and improving it |
| Logging | Each process can be tracked, offering high auditability and reproducibility |
| Ecosystem | Active community and abundant extension packages |

Combined, these features allow LangChain to function as the “brain of the entire application.”
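Prompt management and memory are the two features in the table that most directly shape what the LLM sees. As a rough sketch, assuming Python's standard `string.Template` in place of LangChain's own template classes and a hypothetical `ConversationMemory` class that keeps only the last few turns, they combine like this:

```python
from string import Template

# Hypothetical prompt template; "ExampleCorp" is a placeholder company name.
QA_TEMPLATE = Template(
    "You are a helpful assistant for $company.\n"
    "Conversation so far:\n$history\n"
    "User: $question\nAssistant:"
)

class ConversationMemory:
    """Keeps the last `max_turns` exchanges so later prompts see earlier context."""

    def __init__(self, max_turns: int = 3) -> None:
        self.max_turns = max_turns
        self.turns: list[tuple[str, str]] = []

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))
        self.turns = self.turns[-self.max_turns:]  # drop the oldest turns

    def render(self) -> str:
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)

memory = ConversationMemory(max_turns=2)
memory.add("What is RAG?", "Retrieval-Augmented Generation.")
memory.add("Who uses it?", "Teams that need grounded answers.")
memory.add("Is it expensive?", "It depends on the retrieval setup.")

prompt = QA_TEMPLATE.substitute(
    company="ExampleCorp", history=memory.render(), question="Can it cite sources?"
)
print(prompt)
```

Trimming to a fixed number of turns is one simple way to keep prompts within the model's context window; summarizing older turns is a common alternative.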

1.2 Benefits and Constraints

When introducing LangChain, the advantages and challenges can be organized as follows.

| Benefits | Constraints |
|---|---|
| Flexible workflow construction is possible | Steep learning curve; difficult for beginners |
| Can integrate with diverse external services | Risk of bloat due to over-engineering |
| Dynamic control via agents | Overhead can arise due to complexity |
| Rich community and plugins | Full-scale adoption requires strong engineering capabilities |
| Advanced responses through prompt management and memory | Settings tend to become complex |
| Easy improvement via monitoring and logs | Operational costs can be high |

LangChain is “powerful but hard to handle,” making it suited for teams with the necessary skills and resources.

2. What Is LlamaIndex?

LlamaIndex (formerly GPT Index) is an indexing foundation that efficiently provides external data to LLMs. By integrating various data sources and converting them into searchable formats, it enables LLMs to smoothly utilize the knowledge they need.

Whereas LangChain’s strength lies in “control,” LlamaIndex specializes in “organizing data and improving retrieval efficiency.” This makes it highly attractive for implementing RAG simply and efficiently.
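The core of what LlamaIndex does, converting documents into a searchable form, can be illustrated with a toy index. This sketch assumes fixed-size word chunks and uses term-frequency vectors with cosine similarity as a stand-in for neural embeddings. In LlamaIndex itself, classes such as `VectorStoreIndex` fill this role; the names below (`ToyVectorIndex`, `chunk`, `embed`) are hypothetical and do not mirror its API.

```python
import math
import re
from collections import Counter

def chunk(text: str, size: int = 8) -> list[str]:
    """Split a document into fixed-size word windows (a simple chunking rule)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text: str) -> Counter:
    """Toy 'embedding': a term-frequency vector. Real systems use a neural model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorIndex:
    """Chunk documents once, store their vectors, and return the top-k matches."""

    def __init__(self, documents: list[str], chunk_size: int = 8) -> None:
        self.chunks = [c for d in documents for c in chunk(d, chunk_size)]
        self.vectors = [embed(c) for c in self.chunks]

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        scores = [cosine(q, v) for v in self.vectors]
        order = sorted(range(len(self.chunks)), key=lambda i: scores[i], reverse=True)
        return [self.chunks[i] for i in order[:k]]

docs = [
    "Refunds are issued within 14 days. Store credit is offered for opened items.",
    "Shipping is free for orders over 50 dollars. Express shipping costs extra.",
]
index = ToyVectorIndex(docs)
print(index.retrieve("how long do refunds take", k=1))
# ['Refunds are issued within 14 days. Store credit']
```

The expensive work (chunking and embedding) happens once at index time, so each query only needs one embedding plus a similarity scan; that split is what makes an index-centric design efficient for retrieval.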

2.1 Features

LlamaIndex’s features can be broadly summarized as follows.

| Feature | Description |
|---|---|
| Data connectors | Integrate PDF, Notion, Google Drive, SQL, and other sources |
| Index structures | Choose among vector, tree, list, keyword, graph, and other structures |
| Query engine | Returns the most relevant results for each query |
| Caching | Improves reusability and speeds up responses |
| Transparency | Visualizes data flow, making debugging easy |
| Extensibility | Retrieval algorithms can be swapped out |
| Scalability | Strong at handling large volumes of documents |
| Simple design | Low learning cost and quick to adopt |

As a result, LlamaIndex has established itself as a “hub for data infrastructure.”
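A query engine sits on top of the index: it retrieves relevant passages and turns them into an answer. The sketch below is a hypothetical, framework-free illustration that also shows the caching idea from the table, using `functools.lru_cache` so that repeating an identical query skips retrieval. The corpus, the `retrieve` rule, and the citation format are assumptions for illustration, not LlamaIndex behavior.

```python
from functools import lru_cache

# Hypothetical corpus standing in for an index built from PDFs, Notion, etc.
CORPUS = {
    "policy.pdf": "Remote work is allowed up to three days per week.",
    "handbook.md": "Core hours are 10:00 to 15:00 on weekdays.",
}

calls = {"retrieve": 0}  # counts how often retrieval actually runs

def retrieve(query: str) -> list[tuple[str, str]]:
    """Return (source, text) pairs that share at least one word with the query."""
    calls["retrieve"] += 1
    words = set(query.lower().split())
    return [(src, txt) for src, txt in CORPUS.items()
            if words & set(txt.lower().rstrip(".").split())]

@lru_cache(maxsize=128)
def query_engine(query: str) -> str:
    """Retrieve, then 'synthesize' an answer that cites its sources.
    The cache means a repeated identical query skips retrieval entirely."""
    hits = retrieve(query)
    if not hits:
        return "No relevant documents found."
    return " ".join(f"{txt} [{src}]" for src, txt in hits)

print(query_engine("remote work"))
print(query_engine("remote work"))  # served from the cache
print(calls["retrieve"])            # 1
```

One design caveat: a cache keyed only on the query string will keep serving old answers after the underlying documents change, so a real system needs some form of cache invalidation.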

2.2 Benefits and Constraints

| Benefits | Constraints |
|---|---|
| Efficient, specialized in data connection and search | Weak in agent control, unsuitable for dynamic flows |
| Easy to adopt and learn | Not good at controlling the entire application |
| Choice of diverse index structures | Weaker integration capacity compared to LangChain |
| Fast and accurate search | Lacks features for expansive business processes |
| Easy debugging and visualization | Narrower range of service integrations |
| High scalability | Additional build-out required for production operations |

LlamaIndex excels as a “simple and efficient data processing tool,” but it’s difficult to build an entire application with it alone.

3. Comparison of LangChain and LlamaIndex

LangChain and LlamaIndex are both key frameworks supporting RAG (Retrieval-Augmented Generation), but they have different characteristics in design philosophy, architecture, developer experience, and performance. The table below organizes their differences at a glance.

| Item | LangChain | LlamaIndex |
|---|---|---|
| Central concept | Control and orchestration | Data connectivity and efficiency |
| Architecture | Complex flow control via chains + agents | Simple design via indexes + query engine |
| Primary role | Manage workflows as the brain of the entire system | Organize and retrieve data as the foundation of information supply |
| Strengths | Flexibility, rich features, external integration power | Simplicity, efficiency, stable retrieval performance |
| Weaknesses | High learning cost; designs tend to become complex | Limited control; unsuitable for complex automation |
| Developer experience | Feature-rich but high barrier to mastery | Easy to learn; suited to data-centric processing |
| Performance | Accuracy and speed vary by implementation; tuning required | Consistently high retrieval accuracy and lightweight, fast processing |
| Scalability | Supports large-scale deployment but requires tuning | Strong stability in data-centric projects |
| Applicability | Business process automation and complex control tasks | Use cases centered on knowledge search and document organization |

LangChain is strong for “large-scale systems requiring complex control and integration,” while LlamaIndex is suitable for “projects that handle data simply and efficiently.” The two are more complementary than competitive; choosing appropriately by use case will maximize the effectiveness of RAG.

4. Guidelines for Choosing Between LangChain and LlamaIndex

The choice between LangChain and LlamaIndex depends heavily on the project’s purpose, scale, and resources.

For small-scale PoCs and rapid validation phases, LlamaIndex—with its low learning cost and easy adoption—is appropriate. Examples include “internal FAQ search” or “search assistance for specific documents,” where you want to test quickly within a clear scope.

Conversely, for projects requiring complex business automation or integration with multiple systems, LangChain is effective. In cases such as automating an end-to-end flow—“handling inquiries + connecting to external APIs + generating reports”—agent control shows its strength.

5. Ideas for Leveraging LangChain and LlamaIndex

LangChain and LlamaIndex both function effectively on their own, but in real projects they often produce synergies when combined. Below, we explain several usage ideas in separate paragraphs and organize them in tables where appropriate.

5.1 Knowledge Search System

In enterprises and organizations, massive document assets are often difficult to utilize as-is. With LlamaIndex, you can index PDFs, Word files, spreadsheets, and more, organizing them into a searchable form. LangChain can then receive the search results and perform additional processing—such as summarization, translation, and classification—allowing the system to function as a “knowledge utilization platform” that goes beyond simple search.

| Role division | LlamaIndex | LangChain |
|---|---|---|
| Data processing | Index documents and optimize retrieval | - |
| Response generation | - | Summarization, translation, classification, and other transformations |
| User experience | Provide foundational search | Improve satisfaction with intent-aligned responses |
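The division of roles in this table can be sketched as two layers: a retrieval function (the LlamaIndex side) and transformation functions applied to each hit (the LangChain side). Both layers below are simplified stand-ins under stated assumptions: retrieval is a keyword filter, and `summarize` and `classify` are hypothetical rules where a real system would call an LLM.

```python
import re

# Hypothetical document store that the retrieval layer searches.
DOCS = [
    "Invoice 1042 is overdue by 45 days. Finance will send a reminder this week.",
    "The cafeteria menu changes every Monday. Vegetarian options are available.",
    "Invoice 1043 was paid in full. No further action is required.",
]

def search(query: str, docs: list[str]) -> list[str]:
    """Retrieval layer: keep documents containing every query word."""
    words = query.lower().split()
    return [d for d in docs if all(w in d.lower() for w in words)]

def summarize(text: str) -> str:
    """Post-processing placeholder: keep the first sentence (an LLM would summarize)."""
    return re.split(r"(?<=\.)\s", text, maxsplit=1)[0]

def classify(text: str) -> str:
    """Post-processing placeholder: a keyword rule in place of an LLM classifier."""
    return "action-needed" if "overdue" in text.lower() else "informational"

def knowledge_pipeline(query: str) -> list[dict[str, str]]:
    """Retrieve first, then transform each hit."""
    return [{"summary": summarize(d), "label": classify(d)} for d in search(query, DOCS)]

for row in knowledge_pipeline("invoice"):
    print(row)
```

Keeping the two layers behind separate functions means either side can be replaced, for example swapping the keyword filter for a vector index, without touching the other.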

5.2 Customer Support AI

In customer support, it’s crucial to quickly look up FAQs and manuals. Organizing this information with LlamaIndex keeps accurate knowledge readily available. On top of that, LangChain can automate conversation flows and integrate with multiple systems based on the customer’s questions, providing a more human-like support experience.

Organized by perspective, applications in customer support look like this:

| Perspective | Role of LlamaIndex | Role of LangChain |
|---|---|---|
| Knowledge base | Search across FAQs and manuals | - |
| Response control | - | Control conversation flows and API integrations |
| Efficiency | Shorten search time | Reduce workload through automation |
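The split between knowledge lookup and response control can be illustrated with a small routing function. This is a hypothetical sketch: the FAQ entries, the order-ID format, and `lookup_order` (standing in for an external order-status API) are all invented for illustration, and a LangChain-based system would typically let the LLM make the routing decision rather than regular expressions.

```python
import re
from typing import Optional

# Hypothetical FAQ entries (the "knowledge base" role).
FAQ = {
    "reset password": "Use the 'Forgot password' link on the sign-in page.",
    "business hours": "Support is available 9:00-18:00, Monday to Friday.",
}

def lookup_order(order_id: str) -> str:
    """Stand-in for an external order-status API; the data is made up."""
    fake_db = {"A100": "shipped", "A101": "processing"}
    return fake_db.get(order_id, "not found")

def search_faq(message: str) -> Optional[str]:
    """Return the first FAQ answer whose key words all appear in the message."""
    text = message.lower()
    for key, answer in FAQ.items():
        if all(word in text for word in key.split()):
            return answer
    return None

def handle(message: str) -> str:
    """Response control: call the order API, answer from the FAQ, or escalate."""
    match = re.search(r"\border\s+([A-Z]\d+)", message, re.IGNORECASE)
    if match:
        order_id = match.group(1).upper()
        return f"Order {order_id} is {lookup_order(order_id)}."
    answer = search_faq(message)
    if answer is not None:
        return answer
    return "Let me connect you with a human agent."

for msg in ["Where is order A100?", "How do I reset my password?", "I want a refund"]:
    print(handle(msg))
```

The explicit fallback to a human agent matters in support settings: when neither the knowledge base nor an integration can answer, escalating is usually safer than letting the model improvise.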

5.3 Research Support Tools

In research, extracting needed information quickly from vast numbers of papers and materials is a major challenge. LlamaIndex efficiently indexes literature databases and improves retrieval performance. Combined with LangChain, you can summarize search results, compare multiple papers, and automatically generate literature reviews. This shortens the time researchers spend on information gathering and lets them focus on more creative work.

5.4 Legal Document Review

Legal documents, such as contracts and terms of use, are complex and voluminous. By using LlamaIndex, you can organize large numbers of contracts into a searchable form. Processing those results with LangChain to extract risk areas and compare them with other contracts greatly improves review efficiency.

This use case can be summarized as follows:

| Phase | Contribution of LlamaIndex | Contribution of LangChain |
|---|---|---|
| Information organization | Make contracts searchable | - |
| Review | - | Extract and compare risk sections |
| Efficiency | Streamline document management | Automate analysis processes |
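The review phase, extracting risky clauses and comparing contracts, can be sketched with simple pattern rules. This is an illustrative assumption rather than a recommended method: the `RISK_PATTERNS` labels and regexes are hypothetical, and in the workflow described above an LLM would do the extraction on clauses retrieved by the index.

```python
import re

# Hypothetical risk patterns; production review would typically rely on an LLM
# or a reviewed clause library rather than a handful of regexes.
RISK_PATTERNS = {
    "unlimited_liability": r"unlimited liability",
    "auto_renewal": r"automatically renew",
    "termination_at_will": r"terminate .* at any time",
}

def split_clauses(contract: str) -> list[str]:
    """Split on numbered clause markers such as '1.' or '2.' at line starts."""
    parts = re.split(r"^\s*\d+\.\s*", contract, flags=re.MULTILINE)
    return [p.strip() for p in parts if p.strip()]

def flag_risks(contract: str) -> dict[str, list[str]]:
    """Map each risk label to the clauses that match it."""
    found: dict[str, list[str]] = {}
    for clause in split_clauses(contract):
        for label, pattern in RISK_PATTERNS.items():
            if re.search(pattern, clause, re.IGNORECASE):
                found.setdefault(label, []).append(clause)
    return found

def compare(a: str, b: str) -> dict[str, set[str]]:
    """Report which risk labels appear only in A, only in B, or in both."""
    ra, rb = set(flag_risks(a)), set(flag_risks(b))
    return {"only_a": ra - rb, "only_b": rb - ra, "both": ra & rb}

contract_a = """1. This agreement will automatically renew each year.
2. The vendor accepts unlimited liability for data loss."""
contract_b = """1. This agreement will automatically renew each year.
2. Either party may terminate this agreement at any time."""

print(compare(contract_a, contract_b))
```

Returning the matching clauses (not just labels) from `flag_risks` keeps the output reviewable: a human can check each flagged passage instead of trusting a bare verdict.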

5.5 Multimodal Applications

As a future application, RAG systems that handle data beyond text are conceivable. For instance, LlamaIndex could organize audio transcripts or image captions while LangChain orchestrates the overall flow, enabling multimodal applications. This could lead to next-generation customer experiences and new forms of research support.

6. Conclusion

LangChain and LlamaIndex each play different roles in supporting RAG design. LangChain is a framework for application control and workflow design, while LlamaIndex is a foundation for data connectivity and retrieval efficiency.

They are not rivals but complementary. By assessing your project objectives and team skill set—and using them individually at times or in combination at others—you can realize more practical and reliable RAG systems.
